The design of robust AI algorithms and applications for industrial use from Confiance.ai

In our article “8 scientific challenges on the global approach for AI components with controlled trust”, we listed a number of scientific challenges identified by the Confiance.ai program. This article is devoted to the challenge “Building trusted AI components” and its subcategory “Guaranteeing and measuring robustness”. We discuss how AI components perform when robustness is at stake, that is, in the face of disturbances or adversarial attacks.

Robustness is a key element of the reliability of AI-based systems, alongside explainability, interpretability, fairness, etc. It can be defined at the global level (the ability of the system to perform its intended function in the presence of abnormal or unknown inputs) or at the local level (the extent to which the system gives equivalent responses to similar inputs). Throughout the Confiance.ai program, various methods for the robust design of AI algorithms were identified and their contributions to industrial use cases were evaluated. Some of these methods are presented below.
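One standard way to write the local notion, assuming a classifier $f$, an input $x$, a norm $\|\cdot\|$ and a perturbation budget $\varepsilon$ (notation introduced here for illustration, not taken from the program's deliverables), is:

```latex
\forall x' \;:\; \|x' - x\| \le \varepsilon \;\Longrightarrow\; f(x') = f(x)
```

The largest $\varepsilon$ for which this holds can be seen as the robustness radius of $f$ at $x$; the methods below either enlarge it or make a lower bound on it computable.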

Randomized smoothing (RS)

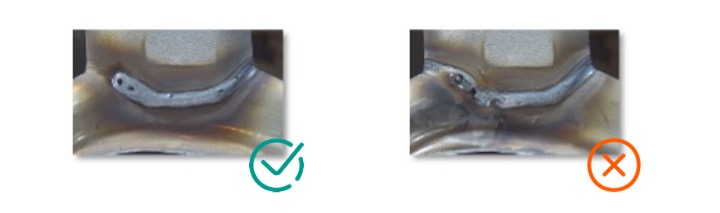

Randomized smoothing (RS) is a technique that comes with theoretical guarantees of local robustness. It consists of taking the majority (or average) decision of a model over many randomly perturbed copies of the same input. Let’s consider Renault’s use case, which classifies images of welds as good or bad:

Figure 1: Classification of welds (Renault use case)
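The idea can be sketched in a few lines, assuming Gaussian noise and the certificate of Cohen et al. (2019), where the smoothed prediction is provably constant within an L2 radius $\sigma\,\Phi^{-1}(p)$. The base classifier, the input and all function names below are hypothetical toys, not Renault's model, and the sketch plugs in the empirical vote share where a rigorous certificate would use a confidence lower bound.

```python
import random
from collections import Counter
from statistics import NormalDist


def smoothed_predict(base_classifier, x, sigma, n_samples=1000, seed=0):
    """Majority vote of base_classifier over Gaussian-perturbed copies of x.

    Returns the winning class and the fraction of votes it received.
    """
    rng = random.Random(seed)
    votes = Counter()
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        votes[base_classifier(noisy)] += 1
    top_class, top_count = votes.most_common(1)[0]
    return top_class, top_count / n_samples


def certified_radius(p_top, sigma):
    """L2 radius within which the smoothed prediction cannot change
    (Cohen et al., 2019), given a probability p_top for the top class.
    Returns 0.0 when p_top <= 0.5, i.e. when no certificate can be issued."""
    if p_top <= 0.5:
        return 0.0
    # inv_cdf is undefined at exactly 1.0, so clamp just below it.
    return sigma * NormalDist().inv_cdf(min(p_top, 1.0 - 1e-12))


# Toy base classifier (hypothetical): class 1 if the features sum to > 0.
def base(v):
    return int(sum(v) > 0)


label, p = smoothed_predict(base, [0.5, 0.5], sigma=0.5)
print(label, p, certified_radius(p, sigma=0.5))
```

For this linear toy classifier the radius should land close to the true distance to the decision boundary, $1/\sqrt{2} \approx 0.71$; for a real network the certificate is a lower bound that depends on how consistently the votes agree.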

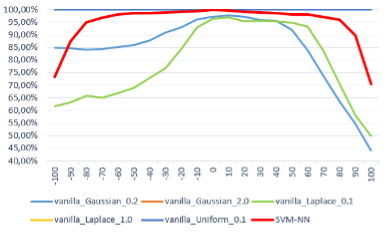

The following figure shows the performance of several weld classification models under adversarial attacks: a network developed by Renault (SVM NN) and five RS models trained with different noise distributions. While two of the RS models (vanilla laplace 0.1 and vanilla uniform 0.1, the green and blue curves) perform less well than Renault’s model, the other three are perfectly robust: their three curves overlap at 100% on the vertical axis.

Figure 2: Comparison of the sensitivity of different models to disturbance variation

RS therefore delivers very satisfactory results, but at the cost of heavier training and more expensive inference, since each prediction requires evaluating the model on many noisy copies of the input.
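Part of the inference cost comes from the certificate itself: the radius must be computed from a statistical lower bound on the top-class probability, and that bound only tightens as the number of noisy samples per input grows. The sketch below assumes a Hoeffding bound for simplicity (Cohen et al. use the tighter Clopper-Pearson interval) and a hypothetical observed vote share of 95%; the helper names are illustrative.

```python
import math
from statistics import NormalDist


def hoeffding_lower_bound(p_hat, n, alpha=0.001):
    """Lower confidence bound on a probability estimated from n Bernoulli
    samples; holds with probability at least 1 - alpha (Hoeffding)."""
    return p_hat - math.sqrt(math.log(1.0 / alpha) / (2.0 * n))


def certified_radius(p_lower, sigma):
    """Cohen et al.-style L2 radius from a lower bound on the top-class probability."""
    if p_lower <= 0.5:
        return 0.0
    return sigma * NormalDist().inv_cdf(min(p_lower, 1.0 - 1e-12))


# Same observed vote share (95%), increasing Monte Carlo budget per input.
for n in (100, 1_000, 10_000, 100_000):
    p_lower = hoeffding_lower_bound(p_hat=0.95, n=n)
    print(f"n={n:>7}  p_lower={p_lower:.4f}  radius={certified_radius(p_lower, 0.25):.3f}")
```

The radius grows with the sample budget but never exceeds $\sigma\,\Phi^{-1}(0.95)$, so larger certificates are paid for with many more forward passes per prediction.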

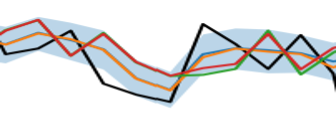

In addition to classification, we also experimented with RS for regression problems, for an Air Liquide use case: predicting gas demand from Air Liquide customers. Here, RS can provide certified intervals for demand forecasts, improving visibility and demand management.

Figure 3: The black curve shows the true demand from Air Liquide customers, while the other curves show RS predictions for different noise distributions. The blue zone is the RS certification zone: it is expected to contain the true values (the black curve) with high probability.
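One published way to smooth a regressor is median smoothing (Chiang et al., 2020): the prediction is the median of the model's outputs under Gaussian input noise, and for any input perturbation of L2 norm at most $\varepsilon$ the smoothed output stays between the $\Phi(-\varepsilon/\sigma)$ and $\Phi(+\varepsilon/\sigma)$ percentiles of those outputs. We do not know which construction was used for the Air Liquide intervals; this bounds robustness to input perturbations, which is a different guarantee from covering the true demand. The model, input and parameters are hypothetical, and the sketch uses raw empirical quantiles without the confidence correction a rigorous certificate needs.

```python
import random
from statistics import NormalDist


def empirical_quantile(sorted_vals, q):
    """Simple empirical quantile of an already sorted list, for 0 < q < 1."""
    idx = min(len(sorted_vals) - 1, int(q * len(sorted_vals)))
    return sorted_vals[idx]


def median_smoothing(regressor, x, sigma, eps, n_samples=2000, seed=0):
    """Median-smoothed prediction plus bounds on how far it can move when x is
    perturbed by any delta with ||delta||_2 <= eps (percentile-smoothing bound)."""
    rng = random.Random(seed)
    outputs = sorted(
        regressor([xi + rng.gauss(0.0, sigma) for xi in x])
        for _ in range(n_samples)
    )
    # For the median (p = 0.5), Phi^{-1}(p) = 0, so the shifted
    # percentiles are Phi(-eps/sigma) and Phi(+eps/sigma).
    shift = eps / sigma
    p_low, p_high = NormalDist().cdf(-shift), NormalDist().cdf(shift)
    return (
        empirical_quantile(outputs, p_low),
        empirical_quantile(outputs, 0.5),
        empirical_quantile(outputs, p_high),
    )


# Toy linear "demand" model (hypothetical), evaluated at one input.
low, median, high = median_smoothing(lambda v: 2 * v[0] + v[1], [1.0, 3.0], sigma=0.5, eps=0.25)
print(f"smoothed forecast {median:.2f}, certified range [{low:.2f}, {high:.2f}]")
```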

Adversarial training

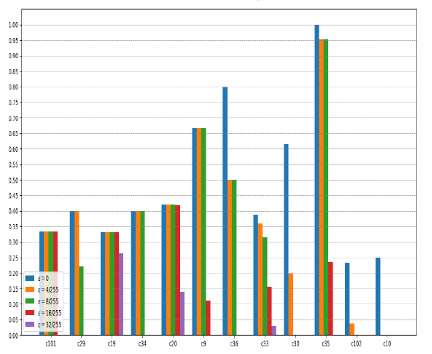

Adversarial training consists of injecting adversarial examples during training, often generated by state-of-the-art attacks such as PGD or Auto-PGD. The resulting model is more resilient to attacks and perturbations similar to those seen during training, although this robustness does not automatically extend to every type of attack. Adversarial training does improve robustness, but it inevitably leads to a drop in accuracy compared with conventional training (there is no free lunch!). Several variants of this method have been used in Confiance.ai, such as TRADES, to optimize the trade-off between robustness and accuracy.
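The min-max loop behind adversarial training can be illustrated on a model small enough to differentiate by hand. The sketch below assumes a logistic-regression classifier, an L-infinity PGD attack and hypothetical toy data; it is plain PGD-based adversarial training, not TRADES and not the networks used in the program.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def pgd_attack(w, b, X, y, eps, step, n_steps):
    """L-infinity PGD against a logistic-regression model p(y=1|x) = sigmoid(w.x + b).

    For the logistic loss, the gradient w.r.t. the input is (sigmoid(w.x + b) - y) * w.
    """
    X_adv = X.copy()
    for _ in range(n_steps):
        grad = (sigmoid(X_adv @ w + b) - y)[:, None] * w[None, :]
        X_adv = X_adv + step * np.sign(grad)      # ascent step: increase the loss
        X_adv = np.clip(X_adv, X - eps, X + eps)  # project back onto the eps-ball around X
    return X_adv


def adversarial_train(X, y, eps, lr=0.1, epochs=200, seed=0):
    """Min-max training: fit the model on PGD examples regenerated every epoch."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.01, size=X.shape[1])
    b = 0.0
    for _ in range(epochs):
        # Inner maximization: worst-case inputs for the current parameters.
        X_adv = pgd_attack(w, b, X, y, eps, step=eps / 4, n_steps=10)
        # Outer minimization: one gradient step on the logistic loss at X_adv.
        err = sigmoid(X_adv @ w + b) - y
        w = w - lr * X_adv.T @ err / len(y)
        b = b - lr * err.mean()
    return w, b


# Toy data (hypothetical): two Gaussian blobs with labels 1 and 0.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(2.0, 1.0, (200, 2)), rng.normal(-2.0, 1.0, (200, 2))])
y = np.concatenate([np.ones(200), np.zeros(200)])

w, b = adversarial_train(X, y, eps=0.5)
X_adv = pgd_attack(w, b, X, y, eps=0.5, step=0.125, n_steps=10)
clean_acc = np.mean((X @ w + b > 0) == (y == 1))
adv_acc = np.mean((X_adv @ w + b > 0) == (y == 1))
print(f"clean accuracy {clean_acc:.3f}, accuracy under PGD {adv_acc:.3f}")
```

For deep networks the input gradient comes from automatic differentiation instead of the closed form above, but the structure (attack inside, parameter update outside) is the same.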

Figure 4: Performance of 12 neural network models trained with the TRADES method under adversarial attacks of intensity ε, for several values of ε. C101, C29, etc. designate training datasets captured from different viewing angles.
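For reference, the TRADES objective as published by Zhang et al. (2019) trains a classifier $f_\theta$ by minimizing a natural loss $\mathcal{L}$ plus a robustness regularizer weighted by $\beta$; the values of $\beta$ and $\varepsilon$ used for the models above are not stated here:

```latex
\min_{\theta}\; \mathbb{E}_{(x,y)}\Big[\, \mathcal{L}\big(f_\theta(x),\, y\big) \;+\; \beta \max_{\|x'-x\| \le \varepsilon} \mathrm{KL}\big(f_\theta(x)\,\big\|\,f_\theta(x')\big) \Big]
```

A larger $\beta$ favors robustness and a smaller one favors clean accuracy, which is how TRADES exposes the trade-off as a single tunable knob.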

Lipschitz networks

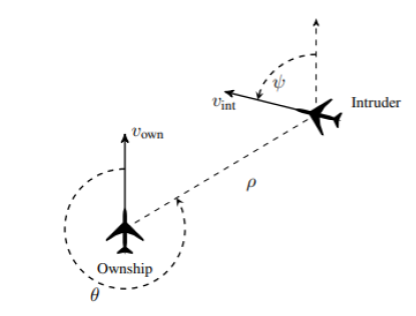

Most neural networks are Lipschitz continuous, but computing their Lipschitz constant exactly is generally hard (NP-hard). Lipschitz networks are built so that their Lipschitz constant is known, or tightly bounded, by construction. Because their output can then only change by a bounded amount for a bounded change in the input, their local robustness can be certified. The performance of Lipschitz networks has been evaluated on various Confiance.ai use cases, such as Airbus’s ACAS-Xu.
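The link between a known Lipschitz constant and a robustness certificate can be sketched as follows, assuming a ReLU network without biases and the L2 norm. For an ordinary network, the product of the layers' spectral norms is a cheap but often loose upper bound; Lipschitz networks typically constrain each layer (for example, to spectral norm at most 1) so that this bound is tight. The toy sizes and function names are hypothetical and are not the ACAS-Xu architecture.

```python
import numpy as np


def spectral_norm(W, n_iter=500, seed=0):
    """Largest singular value of W, estimated by power iteration on W^T W."""
    rng = np.random.default_rng(seed)
    v = rng.normal(size=W.shape[1])
    for _ in range(n_iter):
        v = W.T @ (W @ v)
        v /= np.linalg.norm(v)
    return float(np.linalg.norm(W @ v))


def lipschitz_upper_bound(weights):
    """Upper bound on the L2 Lipschitz constant of a feed-forward network with
    1-Lipschitz activations (e.g. ReLU): the product of the layers' spectral norms."""
    bound = 1.0
    for W in weights:
        bound *= spectral_norm(W)
    return bound


def certified_l2_radius(logits, lip):
    """L2 radius around the input within which the top class cannot change.
    Each logit difference of an L-Lipschitz network is sqrt(2)*L-Lipschitz."""
    top2 = np.sort(logits)[-2:]
    return float((top2[1] - top2[0]) / (np.sqrt(2.0) * lip))


# Toy ReLU network (hypothetical sizes): 4 inputs, 16 hidden units, 5 outputs.
rng = np.random.default_rng(0)
weights = [rng.normal(size=(16, 4)), rng.normal(size=(5, 16))]


def forward(x):
    return weights[1] @ np.maximum(weights[0] @ x, 0.0)


L = lipschitz_upper_bound(weights)
x = rng.normal(size=4)
print(f"Lipschitz bound {L:.2f}, certified L2 radius {certified_l2_radius(forward(x), L):.4f}")
```

The looser the Lipschitz bound, the smaller the certified radius for the same logit margin, which is why constraining the constant by design pays off.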

Figure 5: The ACAS-Xu on-board anti-collision system uses dynamic programming to avoid aircraft collisions. Five actions are possible at any given time (Clear-of-Conflict, Weak Left, Weak Right, Strong Left, Strong Right). An AI-based system must choose the optimal action among these five.

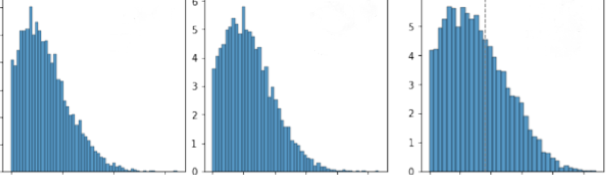

The following figure shows the certification scores of three Lipschitz neural network models trained with different hyperparameters. The higher the certification score, the more robust the model; the best model is therefore the one on the right.

Figure 6: Certification scores for three Lipschitz network models trained on the ACAS-Xu use case.
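The article does not define the certification score. A common choice, assumed here, is certified accuracy at radius $\varepsilon$: the fraction of test samples that are correctly classified and whose Lipschitz certificate covers $\varepsilon$. The function name and the toy logits below are hypothetical.

```python
import numpy as np


def certified_accuracy(logits, labels, lip, eps):
    """Fraction of samples that are correctly classified AND whose prediction
    provably cannot change within an L2 ball of radius eps (L = lip)."""
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    preds = logits.argmax(axis=1)
    sorted_logits = np.sort(logits, axis=1)
    margins = sorted_logits[:, -1] - sorted_logits[:, -2]
    radii = margins / (np.sqrt(2.0) * lip)
    return float(np.mean((preds == labels) & (radii >= eps)))


# Toy logits for 3 samples over 3 classes (hypothetical values).
logits = [[3.0, 1.0, 0.0], [0.5, 0.4, 0.0], [0.0, 2.0, 0.0]]
print(certified_accuracy(logits, [0, 0, 0], lip=1.0, eps=0.5))
```

Under this definition, a model scores higher both by being accurate and by keeping large logit margins relative to its Lipschitz constant.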

References:

- Confiance.ai deliverable, Robust & Embeddable Deep Learning by Design

- Rodolphe Gelin, Augustin Lemesle, Hatem Hajri, “Rendre une IA robuste au bruit” (Making an AI robust to noise), ActuIA, 2022